AI is not just “growing up.”

AI is entering a pressure phase.

Pressure from legal risk.

Pressure from regulation.

Pressure from energy costs.

Pressure from enterprise contracts.

Pressure from liability in courts.

In 2023–2024, the key question was:

“How powerful is the model?”

In 2026, the real question is:

“Who takes the risk when it fails?”

Markets are still pricing AI like a growth story.

It is already a liability story.

This analysis draws on:

• Spellbook 2026 contract data

• Peer-reviewed hospital AI liability research (Frontiers in Public Health, 2025)

• Public publisher traffic disclosures

• Apple 2026 DRAM supply-chain reporting

• IDC Cloud FutureScape 2026 forecasts

• Active US and EU regulatory developments

This is not hype.

This is structural market analysis.

QUICK SUMMARY (30-Second View)

AI competition is no longer about intelligence alone.

It is about risk transfer, governance, and survivability.

What changed in 2026?

Smarter models matter less.

Legal accountability matters more.

Google’s real risk is not compute cost.

It is ecosystem damage.

Apple is not “behind.”

It is redesigning hardware for on-device AI.

Healthcare AI does not scale like SaaS.

It scales like regulated medical devices.

Public AI is convenient.

Private AI is becoming preferred.

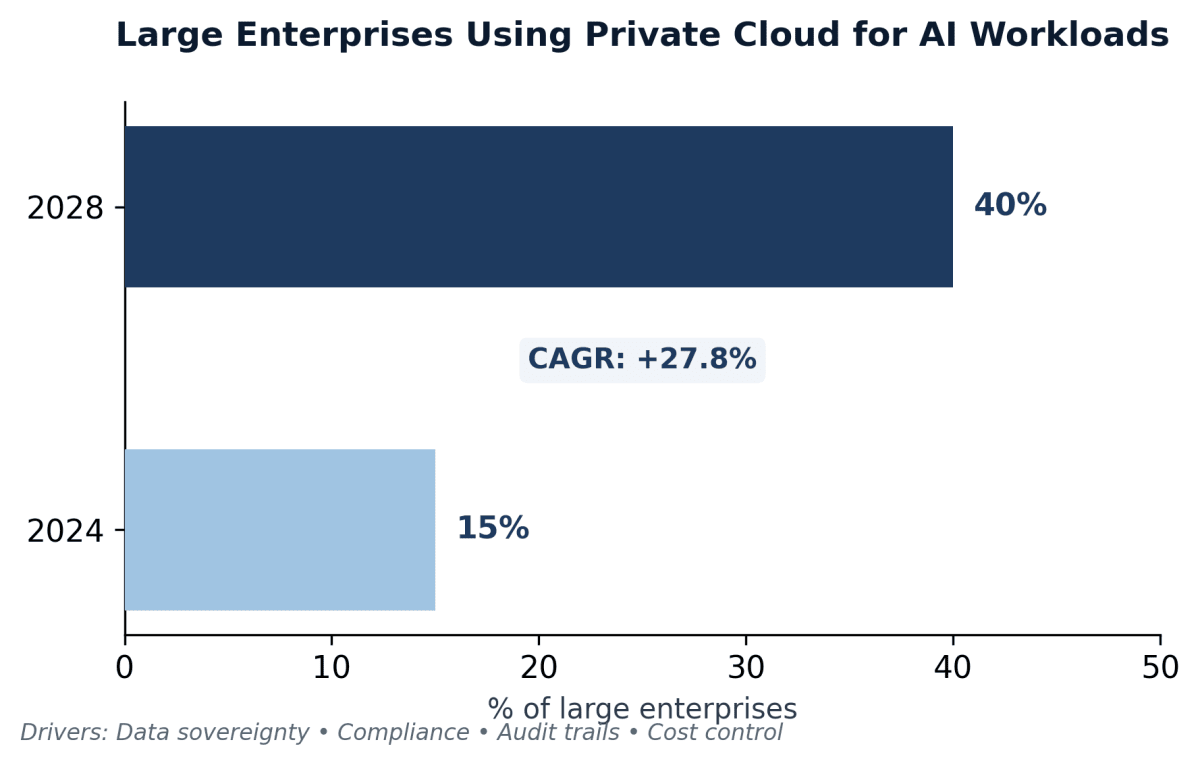

IDC projects that 40% of large enterprises will move meaningful AI workloads to private or hybrid environments by 2028.

1. ENTERPRISE CONTRACTS: RISK IS NOW THE PRODUCT

Spellbook's 2026 State of Contracts Report analyzed hundreds of thousands of agreements.

Key findings:

• 18% of SaaS contracts now include AI usage clauses.

• When indemnification is included, it favors the client over the vendor 3:1.

• 27% of master agreements still include no AI indemnification.

This tells us something important.

Companies are not just buying intelligence.

They are buying legal protection.

Open-source AI is powerful.

But legal teams prefer vendors they can hold accountable.

Hidden structural loser:

Internal AI teams without legal backing or executive sponsorship.

AI budgets are moving from experiments to contract-controlled deployments.

2. GOOGLE SEARCH: THE REAL RISK IS NOT COST

Analyst and market estimates suggest Google handles roughly 8 billion searches per day.

If AI adds $0.0003 to $0.001 per query, then at 8 billion queries a day over 365 days, annual added cost could range from about $876 million to nearly $3 billion.

Google's ad revenue is over $66 billion per quarter.

So compute cost alone is manageable.
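The arithmetic behind that range can be checked with a short sketch. It uses only the figures quoted above. The constant and function names are illustrative, and multiplying the quarterly ad revenue by four to get an annual floor is an assumption made here for comparison, not a reported number.

```python
# Rough check of the added-cost range, using the estimates quoted in the text.
SEARCHES_PER_DAY = 8_000_000_000          # "roughly 8 billion searches per day"
DAYS_PER_YEAR = 365
QUARTERLY_AD_REVENUE = 66_000_000_000     # "over $66 billion" per quarter

# Assumption: annualize by multiplying the quarterly floor by four.
ANNUAL_AD_REVENUE_FLOOR = 4 * QUARTERLY_AD_REVENUE


def annual_added_cost(cost_per_query: float) -> float:
    """Added yearly cost in dollars for a given per-query AI cost."""
    return SEARCHES_PER_DAY * cost_per_query * DAYS_PER_YEAR


low = annual_added_cost(0.0003)   # low end of the per-query estimate
high = annual_added_cost(0.001)   # high end of the per-query estimate

for label, cost in (("low", low), ("high", high)):
    share = cost / ANNUAL_AD_REVENUE_FLOOR
    print(f"{label}: ${cost / 1e9:.2f}B per year, {share:.2%} of annualized ad revenue")
```

Under these assumptions, even the high end stays close to one percent of annualized ad revenue, which is why the cost line looks manageable next to the traffic problem.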

The bigger issue is traffic flow.

Publishers report:

• Up to 44% lower click-through rates after AI summaries.

• In some cases, search traffic falling from 50–80% of total visits to 20–30%.

• Users are significantly less likely to click links when AI answers appear.

If publishers weaken, content supply weakens.

If content weakens, search quality weakens.

Clear prediction:

By Q2 2027, BuzzFeed will publicly attribute measurable revenue pressure to AI-driven search answer extraction.

This is a checkable claim.

3. APPLE: AI STRATEGY WRITTEN IN SILICON

Apple reportedly asked Samsung to explore discrete DRAM packaging for 2026 iPhones.

Why this matters:

• More memory bandwidth

• Better heat control

• Stronger on-device AI performance

This is not marketing.

This is hardware redesign.

Apple's layered approach:

• On-device small model for private tasks

• Cloud model (Gemini integration) for public knowledge

• Local AI hub using M-series chips for personal data

Apple is separating public intelligence from personal intelligence.

The open question:

If cloud AI becomes far more capable, will privacy alone be enough to compete?

4. HEALTHCARE AI: LIABILITY SLOWS EVERYTHING

A 2025 study in Frontiers in Public Health examined AI hospital deployment.

Findings:

• Even assistive AI creates product liability exposure.

• Courts often place responsibility on hospitals and doctors.

• FDA approval for Software as a Medical Device (SaMD) requires validation and monitoring.

Healthcare AI adoption depends on legal clarity.

Not just technical accuracy.

One hospital executive summarized it clearly:

"The model worked. The exposure didn't."

5. PRIVATE AI: CONTROL OVER CONVENIENCE

IDC Cloud FutureScape 2026 projects that 40% of large enterprises will move AI workloads to private cloud or hybrid environments by 2028.

Reasons include:

• Data sovereignty laws

• Compliance requirements

• Predictable cost models

• Audit trail control

Enterprise architects report seeing RFPs that disqualify vendors without tenant isolation and clear data deletion guarantees.

This shift favors:

• Hybrid AI vendors

• Enterprise-focused model providers

• Chipmakers and infrastructure firms

It pressures:

• Pure public-LLM providers

• AI add-on features without pricing power

6. REGULATION IS NO LONGER THEORETICAL

The EU AI Act is entering implementation stages across member states.

In the United States, the FTC continues investigations into AI-related claims under Section 5 authority.

Major copyright cases, including The New York Times v. OpenAI, remain active and could shape training data rules.

If large-scale training is restricted or damages are enforced at scale, financial exposure increases sharply.

Contracts are already adapting to this risk.

THE STRUCTURAL DIVIDE

Two models are emerging:

Capability-first companies

• Fast innovation

• Rapid scaling

• Higher regulatory exposure

Governance-first companies

• Slower rollouts

• Strong compliance posture

• Lower legal volatility

Long-term winners must combine both.

WHAT WOULD PROVE THIS WRONG

This thesis fails if:

• AI inference cost collapses faster than expected

• Publisher revenue stabilizes despite AI summaries

• Regulation remains weak in enforcement

• Open-source ecosystems develop enterprise-grade indemnification at scale

If these happen, consolidation pressure weakens.

FINAL THOUGHT

Infrastructure is not adopted.

It is endured.

The first phase of AI was about capability.

The next phase is about accountability.

The signals are visible:

• 18% of SaaS contracts now include AI clauses

• Publishers report major traffic drops

• Apple is redesigning memory for AI performance

• Hospitals face liability exposure

• IDC projects 40% of large enterprises moving AI workloads to private or hybrid environments by 2028

AI will not be decided by the smartest demo.

It will be decided by who survives contracts, courts, and cost pressure.

The consolidation phase has already begun.

FAQ (Frequently Asked Questions)

What is the AI consolidation phase?

The AI consolidation phase is the shift from rapid model innovation to legal, regulatory, and enterprise risk control.

Why are enterprises moving AI to private cloud?

Enterprises want audit trails, compliance control, predictable costs, and reduced liability exposure.

What is the biggest risk for Google AI search?

Not compute cost. The bigger risk is reduced publisher traffic and ecosystem imbalance.

Why does healthcare AI adopt slowly?

Because FDA validation, liability exposure, and legal standards slow deployment.